Beyond text: The next wave of AI interfaces

Three interface models for building more intuitive AI experiences

LLMs have come a long way in understanding intent, but our interactions with them still feel stuck in the past. We type prompts, delete, and retype. That isn't because AI can't understand us. It's because we're not always sure what's possible, or what will get the result we're looking for in the first place. When AI's capabilities are locked behind a text box, discovery becomes trial and error.

Even with today's AI tools like GPT, Claude, Bolt.new, or Midjourney, users still need to experiment with prompts until something works. Many of these tools address this with contextual prompts, suggestions, or interactive elements, but there's still significant room for improvement.

As product builders, designers, and thinkers, we're uniquely positioned to rethink AI interactions. How can we make collaborating with AI feel more natural and intuitive?

Historical parallel: From text adventures to modern games

We've seen this interaction pattern evolve before in gaming interfaces. Early games relied entirely on text-based commands: players had to type every action in plain text and hope the system would understand. As computing power improved, games introduced static visuals, then point-and-click menus, and eventually full 3D environments with contextual interactions.

The gaming interface evolution

1. Text commands (Zork, 1980)

In the early '80s, games like Zork relied entirely on typed commands:

> go north

You enter a dimly lit cavern.

> examine torch

The torch flickers with a warm glow.

These interfaces required players to:

Translate what they wanted to do into a specific syntax, with no guidance if an incorrect command was used.

Guess available commands, often through frustrating trial-and-error.

Mentally visualize environments (which is actually quite fun, but you also had to remember every command).

Learn specific, sometimes arbitrary commands like “take lamp” instead of “pick up light”.

Today's AI interactions share many of these frustrations. Users still struggle to predict which prompts will produce the results they want, or they simply don't know what an LLM can do. Left guessing at capabilities, they often miss powerful features because they don't know to ask for them.

2. Text commands with static visuals (King's Quest, 1984)

King's Quest introduced visual representations of scenes in addition to typed commands. Players could see environments, characters, and objects. However, interaction still relied on typing, leaving users guessing at what their character could do.

3. Point-and-click interfaces (Monkey Island, 1990)

Games like Monkey Island further simplified interaction by letting players click directly on the screen. Instead of guessing which commands worked, players selected from a menu of actions like "look at" or "use" and then clicked objects in the scene. Cognitive load dropped drastically because players no longer had to guess the available actions.

4. Early isometric worlds with contextual menus (Ultima Online, 1997)

Ultima Online offered a persistent, isometric world where you could interact with the environment using context-sensitive menus. Players interacted directly with objects and characters, and contextual menus clarified what they could do. This approach balanced immersion with structured control, significantly lowering the barrier to entry and improving discoverability.

5. Immersive spatial interfaces with contextual interactions (World of Warcraft, 2004 onward)

Many games came before it, but World of Warcraft popularized immersive, spatial environments where players could interact directly with the game world. Rather than relying on typed commands or static menus, players navigated complex worlds using context-sensitive controls: they could click on objects, characters, or enemies to trigger actions, with the interface adapting to the situation. This made it even easier to interact with the game world and to turn what a player wanted to do into action.

Today, the most effective gaming interfaces blend multiple interaction styles: direct manipulation for common, intuitive tasks, and text-based shortcuts or commands for expert-level precision.

Each evolution in gaming interfaces reduced the mental translation between what players wanted to do and how they had to express it. AI interfaces are at a similar inflection point. The following approaches could help bridge that same gap between intention and interaction, making AI collaboration feel as natural as modern gaming does today.

Three ways AI interfaces might evolve

1. Spatial interfaces: From chat to canvas

The evolution from text adventures to 3D worlds let players navigate using spatial memory instead of typing directional commands. AI interfaces could do the same by leveraging our innate spatial reasoning.

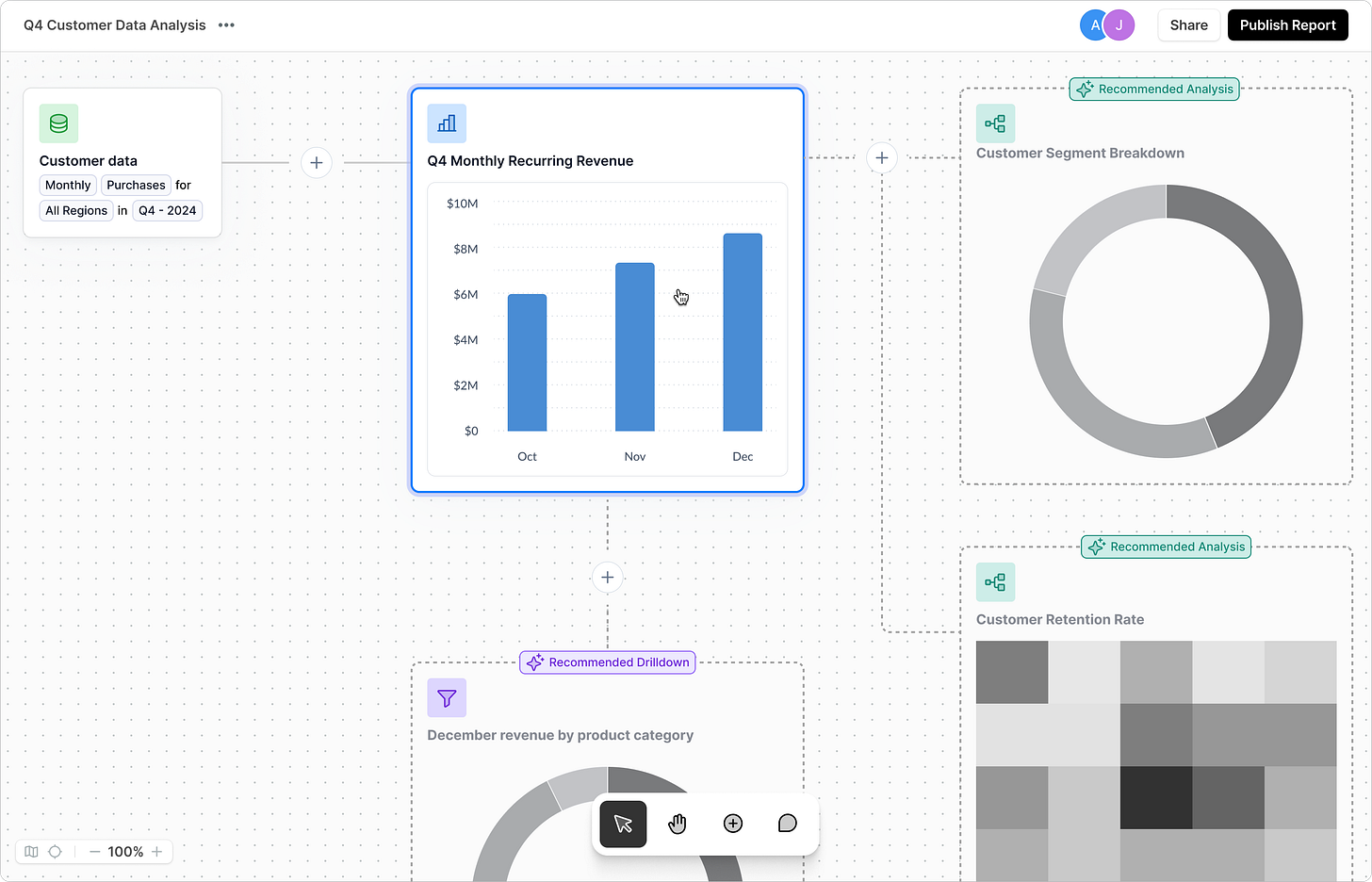

Imagine a multiplayer analytics workspace that works more like Figma for data than like Tableau, where users interact with data on a collaborative, infinite 2D canvas. Instead of building dashboards, users organize and interact with data visually, grouping insights naturally while AI suggests correlations based on spatial relationships.

Example: Collaborative data analysis

Current challenge: Data analysts share static dashboards that can quickly become outdated.

Spatial solution: A shared canvas where multiple analysts can simultaneously organize manual and AI-generated visualizations, annotate findings, and navigate complex data relationships.

Interaction shift: Instead of describing data needs through text prompts, analysts can physically arrange, group, and connect data elements to organize information. The AI learns from these spatial arrangements to suggest related insights or visualizations.

The evolution from chat to space

Just as games evolved from text commands to spatial worlds, this concept shows one way AI interactions could improve data analysis. Moving beyond the back-and-forth conversation model of chat interfaces, this spatial canvas lets users navigate data, visualizations, and insights spatially.

Key elements of the design

Directional semantics

The layout itself could carry meaning in these kinds of workspaces. For example:

Horizontal connections: Represent "Related Analysis", different perspectives on the same data, creating a natural flow where moving left-to-right explores alternative insights.

Vertical connections: Represent "Drilling Down", exploring deeper details within a specific area, where top-to-bottom movement reveals increasing levels of detail.
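The directional rule above is simple enough to sketch. Below is a minimal Python illustration, assuming each card on the canvas has x/y coordinates with y increasing downward (as in most screen coordinate systems). The `Card`, `Relation`, and `classify_link` names are hypothetical, as is the choice to classify a link by whichever axis dominates. No existing canvas tool is implied.

```python
from dataclasses import dataclass
from enum import Enum


class Relation(Enum):
    # Horizontal links: alternative perspectives on the same data.
    RELATED_ANALYSIS = "Related Analysis"
    # Vertical links: deeper detail within one area.
    DRILLING_DOWN = "Drilling Down"


@dataclass
class Card:
    """A hypothetical chart or insight card placed on the canvas."""
    title: str
    x: float  # canvas units, increasing to the right
    y: float  # canvas units, increasing downward


def classify_link(source: Card, target: Card) -> Relation:
    """Infer a link's meaning from whichever axis dominates the offset between two cards."""
    dx = target.x - source.x
    dy = target.y - source.y
    # A perfect diagonal (tie) is treated as horizontal; that choice is arbitrary.
    if abs(dx) >= abs(dy):
        return Relation.RELATED_ANALYSIS
    return Relation.DRILLING_DOWN


overview = Card("Weekly signups", x=0, y=0)
by_channel = Card("Signups by channel", x=400, y=20)
by_region = Card("Signups by region", x=10, y=300)
print(classify_link(overview, by_channel).value)  # Related Analysis
print(classify_link(overview, by_region).value)   # Drilling Down
```

Using only the dominant axis keeps the rule predictable for people arranging cards by hand. Distinguishing up from down, or left from right, would be a natural extension.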

AI recommendations within space

Instead of interrupting with chat messages, AI can suggest what to analyze or explore next by surfacing recommendations as ghost outlines within the space. Users can choose to interact with AI suggestions or create their own visualizations and arrange insights to match their mental models.

Beyond traditional interfaces

Unlike chat interfaces where previous insights scroll away, this spatial environment keeps all insights visible and connected. Users can explore multiple analytical paths simultaneously rather than sequentially, with relationships between insights made clear through visual connections.

Compared to static dashboards, this canvas grows organically with proactive AI suggestions appearing in context. Information is arranged by grouping rather than a fixed layout, and multiple analysts can navigate and contribute to the shared space.

This approach turns AI from a chat-based assistant into a spatial thinking partner that enhances how we naturally organize information in space and removes the friction of translating goals into typed commands.

2. Ambient intelligence: AI that understands context

Modern games often use contextual awareness to anticipate player needs. Valheim, for example, uses a raven to deliver contextual hints that teach you how to do new things, and you unlock new building and crafting recipes as you experiment and perform actions in the game. The Last of Us offers subtle hints when you're stuck, suggesting actions without requiring you to explicitly ask for help.

AI could work the same way, proactively assisting you by understanding context rather than waiting for commands. Imagine an AI-powered workspace or menu bar app that helps you throughout your day. It could notify you before an important meeting and pull context from your tech stack so you have relevant notes, insights, and resources ready. It could read your calendar to understand who you're meeting with and anticipate questions or information you might need. Your environment becomes actively helpful.

Instead of reacting to commands, ambient intelligence continuously supports your workflow, pulling in relevant information when you need it:

A persistent, contextual intelligence layer across all work.

Learns from habits (e.g., “You usually check PRDs before meetings”) and adjusts priorities dynamically.

This approach aggregates insights from the tools you use every day, like Slack, Notion, Figma, Linear, and more.

Example: Meeting-aware assistant

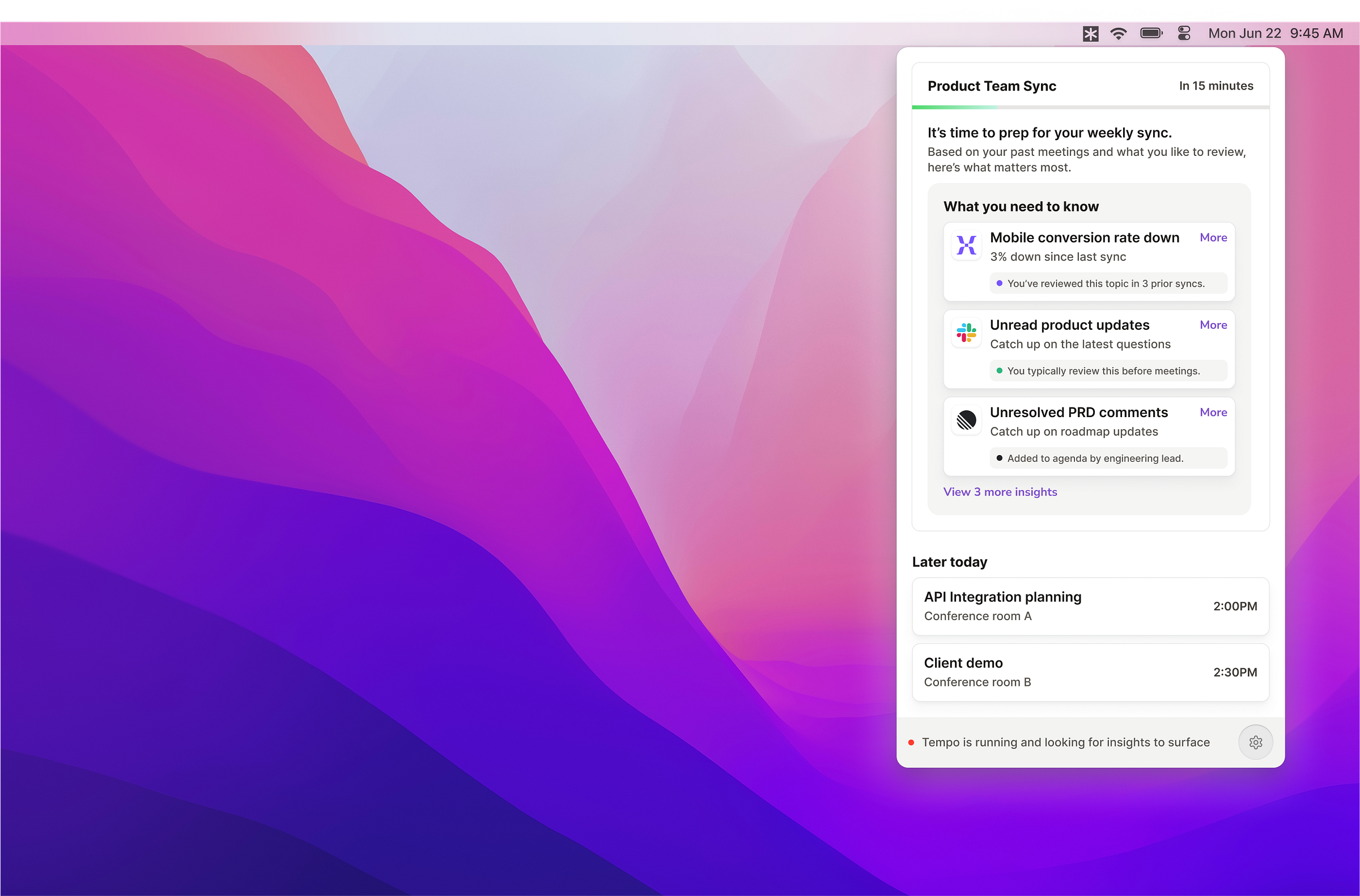

Imagine an app called "Tempo" that sits quietly in your menu bar until it detects an upcoming meeting. Fifteen minutes before your product team sync, it proactively surfaces:

Documents referenced in previous meetings

Recent Slack threads about key topics (like "5 unread messages about the search feature")

Synthesized insights like "Mobile conversion rate dropped 3% since last report"

Unlike traditional calendar notifications that remind you of a meeting's existence, this ambient intelligence layer could understand the meeting's context and your typical prep habits. The system recognizes recurring relationships between meeting types and information needs, then delivers precisely what you need when you need it, without you having to ask for it.

The evolution from commands to ambient intelligence

Just as games evolved from text commands to contextual hints, AI interfaces could shift from command-driven interactions to ambient intelligence that proactively surfaces what's needed.

Key elements of the design

Awareness & progressive disclosure

The interface could prioritize information based on urgency and surface the most critical information the closer you are to needing it.

Pattern recognition & relevance

Instead of displaying a pile of notifications, the system can explain why each item is relevant:

"Discussed in 4 previous syncs".

"You typically review before meetings".

"Added to agenda by engineering team".

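One way to picture urgency-based disclosure combined with "explain why, not just notify" is a scoring function that records a human-readable reason for every signal it counts. The Python sketch below rests on several assumptions. The `ContextItem` fields, the score weights, the time thresholds, and the sample items are all hypothetical. A real assistant would derive these signals from calendar and tool integrations rather than hard-coded values.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class ContextItem:
    """A hypothetical document or thread the assistant could surface."""
    title: str
    past_sync_mentions: int = 0       # times it came up in earlier meetings of this series
    usually_reviewed: bool = False    # the user habitually opens it before these meetings
    added_to_agenda_by: Optional[str] = None


@dataclass
class Suggestion:
    title: str
    score: int = 0
    reasons: List[str] = field(default_factory=list)


def explain(item: ContextItem) -> Suggestion:
    """Score an item; every signal that adds points also adds a reason the user can read."""
    s = Suggestion(item.title)
    if item.past_sync_mentions:
        s.score += item.past_sync_mentions
        s.reasons.append(f"Discussed in {item.past_sync_mentions} previous syncs")
    if item.usually_reviewed:
        s.score += 3  # illustrative weight
        s.reasons.append("You typically review before meetings")
    if item.added_to_agenda_by:
        s.score += 5  # illustrative weight
        s.reasons.append(f"Added to agenda by {item.added_to_agenda_by}")
    return s


def surface(items: List[ContextItem], minutes_until_meeting: int) -> List[Suggestion]:
    """Progressive disclosure: reveal more of the ranked list as the meeting approaches."""
    # The thresholds below are assumptions, not tuned values.
    if minutes_until_meeting > 60:
        limit = 1  # far out: only the single most relevant item
    elif minutes_until_meeting > 15:
        limit = 3
    else:
        limit = 5
    ranked = sorted((explain(i) for i in items), key=lambda s: s.score, reverse=True)
    # Items with no supporting signal are never surfaced.
    return [s for s in ranked if s.score > 0][:limit]


items = [
    ContextItem("Search feature PRD", past_sync_mentions=4, usually_reviewed=True),
    ContextItem("Q3 roadmap", added_to_agenda_by="engineering team"),
    ContextItem("Old brainstorm notes"),
]
for suggestion in surface(items, minutes_until_meeting=15):
    print(suggestion.title, "|", "; ".join(suggestion.reasons))
```

Keeping each reason next to the score lets the interface show a line like "Discussed in 4 previous syncs" instead of an unexplained alert.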
Digital ecosystem integration

This approach shows how AI may help us aggregate and organize knowledge across tools:

Slack, Notion, Linear, etc. → The system connects to each of these tools and understands their content.

The system understands how these tools work together and keeps related insights together.

Beyond traditional interfaces

Ambient intelligence moves beyond alerts and search. It doesn't wait for commands. It learns what matters to you and when it matters, then brings it to you proactively.

3. Adaptive documentation: Interactive, intelligent content

Traditional documentation is static and one-size-fits-all. Whether you're a developer, product manager, or designer, you see the same content and are expected to interpret it the same way. AI could help change this.

Adaptive documentation could turn passive knowledge into an active, evolving system where content adapts to whoever is viewing it. AI could help translate insights across roles, ensuring the right information surfaces for each reader.

From static documentation to adaptive knowledge

Static FAQs and documentation assume everyone understands information the same way. In reality:

A designer might describe an issue in terms of interaction patterns, while…

A developer might need a breakdown of logical flows and constraints, and…

A PM may just want to know how it affects timelines and adoption.

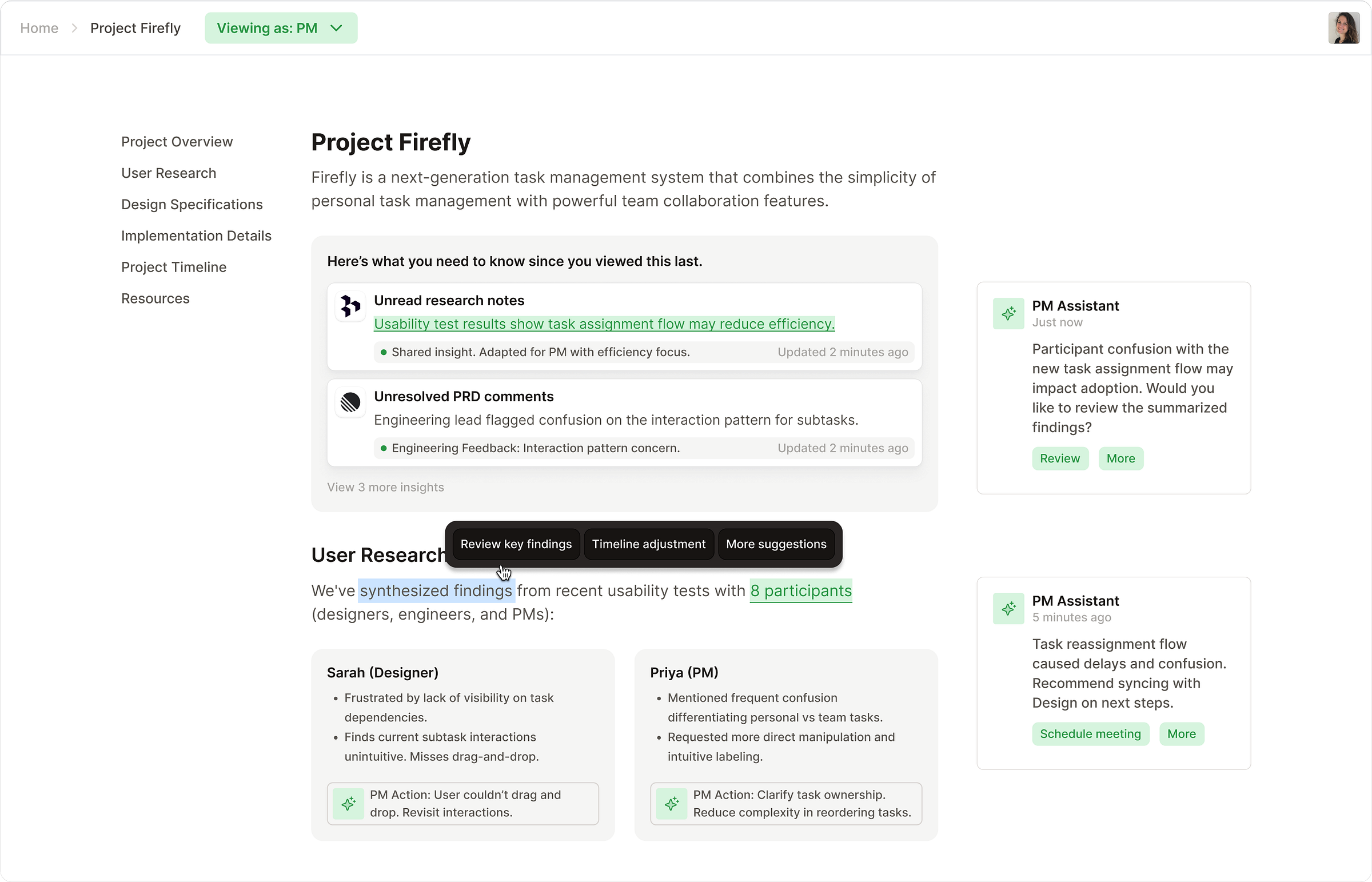

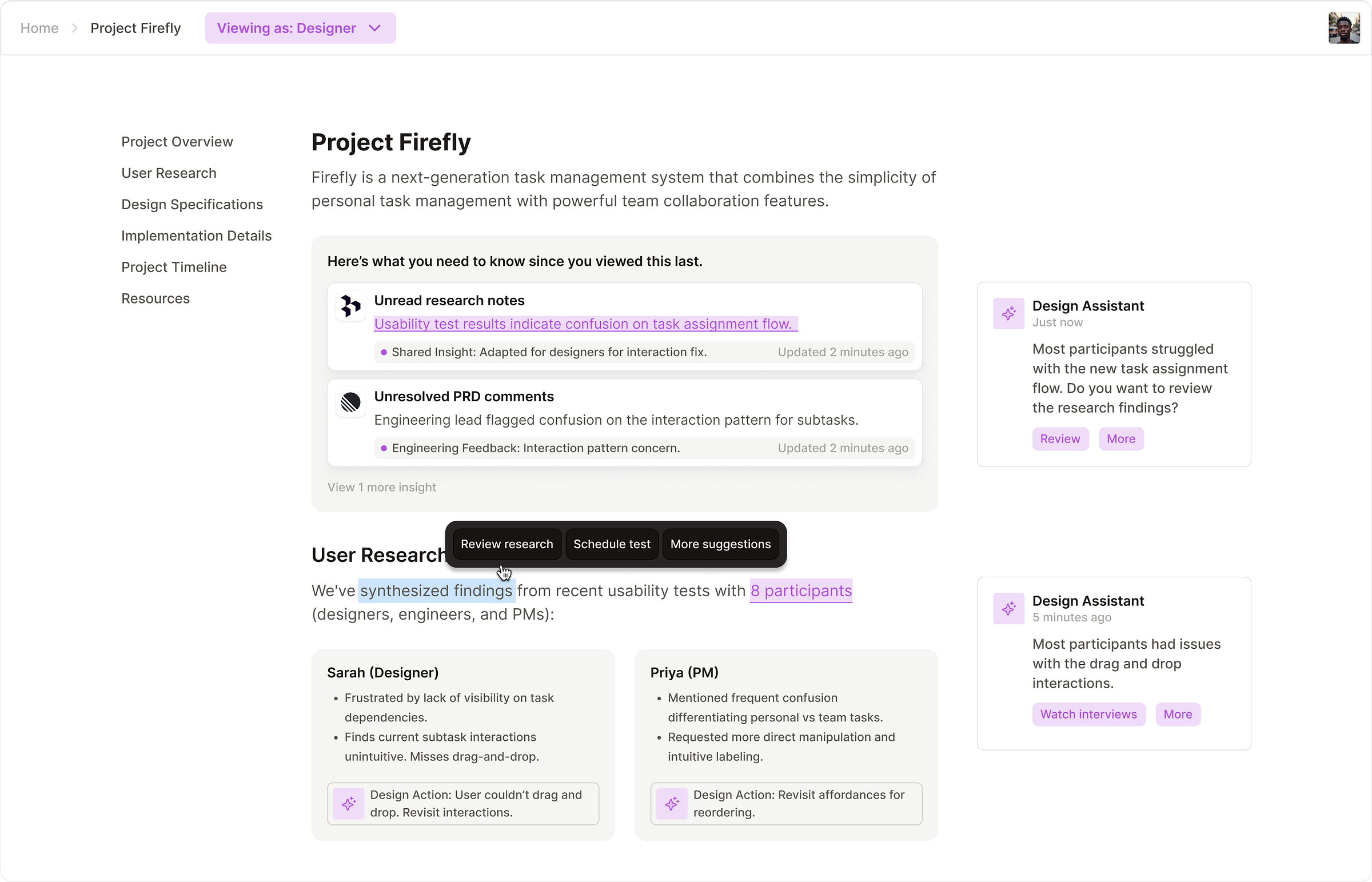

Example: Designer vs. PM views in AI-powered documentation

In a traditional document, everyone sees the same usability research summary. But with adaptive documentation, an AI-powered system can adjust the insights based on the persona viewing it:

Designer View

A designer might see:

Insight: “Participants struggled with the new task assignment flow.”

Design takeaway: “Consider refining drag-and-drop affordance.”

Next step: “Rework the interaction model before usability retest.”

PM View

A PM, looking at the same research, might see:

Insight: “Usability issues with task assignment could slow adoption.”

Prioritization takeaway: “Estimated effort: 5 points. Priority: High.”

Next step: “Sync with design on UI refinements.”

Instead of forcing teams to translate findings manually, AI tailors insights per role, ensuring each function gets the most relevant takeaways.
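The per-role views above can be sketched as one shared finding stored with several framings, plus a small function that chooses which framing to render. In this minimal Python sketch the framings are hard-coded from the example; in the system the article imagines, an AI model would generate them. The `FINDING` structure, the `render_view` function, the role keys, and the fallback behavior are all hypothetical. The sketch assumes the reader's role is either inferred or set by a manual toggle.

```python
from typing import Dict, Optional

# One shared research finding, stored with a framing per role.
# In the imagined system an AI model would write these; here they are copied from the example.
FINDING: Dict[str, Dict[str, str]] = {
    "designer": {
        "Insight": "Participants struggled with the new task assignment flow.",
        "Design takeaway": "Consider refining drag-and-drop affordance.",
        "Next step": "Rework the interaction model before usability retest.",
    },
    "pm": {
        "Insight": "Usability issues with task assignment could slow adoption.",
        "Prioritization takeaway": "Estimated effort: 5 points. Priority: High.",
        "Next step": "Sync with design on UI refinements.",
    },
}


def render_view(finding: Dict[str, Dict[str, str]], inferred_role: str,
                override: Optional[str] = None) -> str:
    """Render one role's framing; a manual toggle (override) takes precedence over the inferred role."""
    role = override or inferred_role
    framing = finding.get(role)
    if framing is None:
        # Unknown role: fall back to the one-size-fits-all view, showing every framing.
        return "\n\n".join(render_view(finding, known) for known in finding)
    return "\n".join(f"{label}: {text}" for label, text in framing.items())


print(render_view(FINDING, inferred_role="pm"))
print(render_view(FINDING, inferred_role="pm", override="designer"))
```

Storing one finding with several framings, rather than separate documents per role, keeps every view tied to the same underlying research.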

The evolution from static docs to context-aware knowledge

The interface borrows from innovations in gaming history. Just as games evolved from requiring users to type "go north" to offering contextual menus of relevant actions, adaptive documentation offers contextual interactions based on what you're looking at and who you are.

Key elements of the design

AI-driven context

Personalized insights → AI adjusts framing and takeaways based on the user’s role.

Translation layer → Ensures designers, PMs, and developers each understand the same insight in their own terms, so they can collaborate seamlessly.

Self-updating documentation

AI tracks changes across tools (Linear, Figma, Slack, etc.) and updates knowledge accordingly.

Surfaces recent changes so teams always have the latest context.

Cross-functional understanding

Reduces miscommunication → AI ensures insights make sense for different roles.

Reduces redundant meetings → Instead of re-explaining findings, everyone automatically sees what's relevant to them.

Beyond static knowledge silos

Unlike traditional documentation that requires manual updates and searching, adaptive documentation synthesizes and personalizes information dynamically:

Designers → Usability research, interaction models, and visual critiques.

PMs → Business impact, prioritization guidance, and team dependencies.

Developers → Technical specs, logic flows, and API docs.

This concept imagines knowledge systems that adapt to your context, not just the content itself. Early versions may reframe language or priorities, but the real opportunity is in shaping how teams interact, collaborate, and make decisions. Everyone sees the same core insight, framed in the way that’s most actionable for their role. Designers get interaction takeaways. PMs see business impact. All from the same shared research.

Views could be inferred automatically from your role or toggled manually. Instead of static docs, this points to a future where AI continuously synthesizes and contextualizes knowledge from tools like Figma, Slack, and Linear, helping teams work together better in real time.

Adaptive documentation rethinks how information is delivered, shaping the framing, language, and presentation so people can quickly understand what matters and move forward.

Principles for better AI interfaces

These approaches share core principles:

Align with human nature – Leverage natural cognitive skills like spatial memory.

Reduce translation – Narrow the gap between thought and execution.

Maintain user flow – Minimize disruption and complexity during interactions.

Context-awareness – Adapt seamlessly and automatically to user contexts.

Flexible control – Let users easily shift between automation and manual interaction.

The path forward: Lessons from gaming history

Text-based interfaces, while convenient, aren't the ideal long-term interaction pattern. The future of AI interactions will blend intuitive visual and spatial interactions with precise textual commands.

So I ask you to think about your own workflows. How might they improve if designed around natural human interactions, moving beyond text prompts?

What are the biggest friction points in your workflow today? As product designers, thinkers, and builders, how can we begin experimenting with solutions that move beyond text? The next generation of AI interfaces is waiting to be designed.

Let's build.